by dbward | Feb 10, 2020 | Uncategorized

Innovation is one of those words that gets used more often than it gets defined. And these days, it gets used a whole lot. In fact, it’s probably overused. But despite the popularity of the term (or maybe because of it) we run into a lot of confusion about what the word innovate even means.

Let’s clear that up today, shall we?

Team Toolkit uses a pretty simple definition of innovation. It’s just three words: “NOVELTY WITH IMPACT.” We like this definition for several reasons.

First, it’s simple, clear, and short enough to be memorable. That’s important, because a definition people can’t understand or remember isn’t very helpful, is it?

Second, “novelty with impact” is broad enough to be applicable in a wide range of situations. For example, we might talk about novel technologies or novel processes, new organizational structures or new methods of communication. We know novelty comes in a lot of different flavors, so our definition encompasses them rather than trying to list them (e.g. “Innovation is any new process, technology, solution, product, etc…”).

Incidentally, we tend to take a broad, inclusive understanding of the word novelty. The thing doesn’t have to be new to the whole world to count as novel. If it’s new to us, or new to our domain, that counts.

Similarly, the word impact might refer to saving time or saving money, increasing effectiveness or improving efficiency. It might refer to solving a problem, making an improvement, changing the marketplace or revolutionizing the battlespace. Rather than trying to pack our definition with a comprehensive list of all that stuff, we just refer to all forms of positive difference making as “impact.”

Third, “novelty with impact” helps distinguish innovative things from non-innovative alternatives. How can we tell the difference between an innovative product and a standard offering? The innovative product is novel. And what’s the difference between an innovative product and something that is merely creative? The innovative product has an impact.

But the best thing about this definition is that it points to two very important questions: What novelty are you trying to introduce? What impact are you trying to have?

Answering those two questions is a great way to get clarity about the sort of innovation we’re trying to introduce to the world.

For example, this blog post is offering a new definition of innovation (Novelty!), to help people better understand what innovation is and to build their innovation strategies (Impact!).

Which brings us to you. What sort of novelty are you trying to introduce in your arena? What sort of impact are you trying to have? Answer those questions and you’re well on your way to doing it.

by dbward | Jan 6, 2020 | Uncategorized

Our previous blog post looked at the Rose, Bud, Thorn canvas, one of the most popular tools in the kit. This week’s post continues on that theme, but with a bit of a twist.

A few months back one of our ITK facilitators received an interesting request from a potential toolkit user. They said they were open to the idea of using the Rose, Bud, Thorn with their team, but only if we could create a militarized, hypermasculine version. They said the floral imagery inherent in this tool made them uncomfortable, and they suggested perhaps something like “Gun, Knife, Bomb” might work better.

Um, that’s a big nope. We’re not doing that.

As a general rule, Team Toolkit aims to meet people where they are. We customize tools all the time, to make sure they fit well with the specific language, preferences, and inclinations of the people we are trying to help. We think it’s good to modify our vocabulary and make sure our language choice is as clear as possible. If a word has different meanings in different domains, we make every effort to ensure we’re using the right one for the environment.

For example, with some folks we might talk about mission and purpose rather than objectives and goals. Similarly, when working with military organizations we tend to not use terms like “profit” or “sales,” because those terms aren’t generally relevant within DoD organizations.

However, changing a tool’s name to accommodate narrow biases and insecurities does not do anyone any favors. And to be quite clear, this team was not asking to rename RBT because they come from a post-agrarian society and can’t relate to botanical metaphors. No, they asked for the change because they explicitly felt flowers aren’t manly enough.

Here’s the thing – if you can’t handle a flower-based metaphor because it seems too girly, you’re going to have a really hard time doing anything innovative. I promise, accommodating this team’s request would have limited their ability to be innovative, not enhanced it.

Team Toolkit’s mission is to “democratize innovation.” We are here to help people understand what innovation is and how to do it. This necessarily involves expanding people’s horizons, introducing new metaphors, building new connections, and taking teams beyond business-as-usual methods and symbols. Allowing teams to stay in their narrow comfort zone is the very definition of non-innovation.

So yes, we love to customize our tools, but not if those changes make the tools less effective. Not if the changes narrow people’s mental aperture rather than expanding their horizons. And definitely not if the change reinforces lame stereotypes.

And for those of you who ARE open to a flower-based innovation tool, can I suggest also checking out the Lotus Blossom?

by Aileen Laughlin | Oct 7, 2019 | Uncategorized

Why is being user-centered such a challenge in the DoD?

U.S. Army photo by Pfc. Brooke Davis, Operations Group, National Training Center

I first asked myself this question while working Human Systems Integration (HSI) as a Systems Engineer for a large defense contractor. As I explored this difficult topic, I realized I might have more luck driving change from the other side of the table. So, I made my way to a FFRDC to work more directly with the Government and help shape programs and projects to be more user centered.

Six years later, I’m happy to report that Cyber is the new thorn in everyone’s side! Jokes aside, user-centered design has not yet achieved its full potential. While I’ve seen more programs understand and embrace user-centered design than before, it is still frustrating that so little has changed in the larger environment. Every program I join struggles to bring human-centered practices to bear.

The challenges I most often see: missing or cryptic SOW statements, outdated / incorrect MIL-STDs, insufficient expertise / staff planning, and no or poor requirements. In the worst cases, only a handful of people on the program understand the user’s actual mission, and even fewer have ever spoken with users.

There’s a lot we can learn from commercial industry on this point. Commercial products that succeed tend to have a strong customer-focused value proposition – in DoD terms, they help users accomplish a mission. Delivering a successful product or service requires knowing your customers, the jobs they need to get done, and the associated pain points and gains. That’s true no matter the domain or industry — involvement with users should not be limited to generating requirements. It should not be treated as a contractual checkbox to fulfill, nor as the sole responsibility of an expert or team. Instead, it should be early, frequent, and meaningful. Being user-centric is how the commercial world works because it has an impact on their bottom line. We see this in manufacturing and software alike, where user engagement is a core principle of commercial practices like agile and Lean.

And yes, I understand that traditional DoD acquisition approaches can be limiting. However, it is possible to make designing for and engaging with users a part of how we work. Start by considering these questions:

- If we’re so risk averse and cost conscious, why aren’t we making user-centered design a non-negotiable part of our approach?

- Why do we spend SO MUCH TIME writing limiting and insufficient requirements? What might a Minimum Viable Product (MVP) requirements document look like for your program?

- As we read proposals for design and development, shouldn’t we be looking for appropriate expertise and design process-related words like research, prototype, and iterations?

- Why don’t we kick off programs with mandatory visits to engage with users?

- Why don’t we leverage user ingenuity and “field fixes” as valid sources for upgrading systems?

- Why can’t we have multiple user assessments during discovery and design? Why wait until test?

- Why aren’t we inviting or incentivizing or making opportunities for users to be part of engineering teams (e.g., Kessel Run) or directly tapped for ideas and solutions (e.g., AFWERX)?

- In a nutshell, why do we keep doing business this way?

If you haven’t heard, there’s some good news on this front. The AF recently appointed a Chief User Experience Officer. I’m excited to see what that will mean for the Air Force. As an Army Brat, I wish we had named one first. (Call me Army – I want to help Beat Navy!) I’m still holding out hope that the other services will follow suit and, more importantly, that the DoD taking a Warfighter-centric approach to research, engineering, design, acquisition, and sustainment shifts from being novel to our new normal.

by Niall White | Sep 16, 2019 | Uncategorized

Remember the days of building a sandcastle in a sandbox, or creating a spaceship out of an old egg carton and tinfoil? These are examples of Minimum Viable Products (MVPs) and/or Prototypes!

Perhaps you didn’t have the money to buy the latest Millennium Falcon when you were a kid, so you used the resources readily available to you to build something that shared the same vision: the power of space travel in the palm of your hands. This vision likely inspired others to make a similar investment, ask you how you did it, and carefully monitor the groceries brought in from the car. These same principles scale to a real-life next generation space transport, designed to carry a crew to Mars.

In the world of Agile software development, “DevSecOps”, and Design Thinking, it’s not uncommon to hear the terms Minimum Viable Product (MVP) and Prototype. You may even hear them used interchangeably, but is this correct? Let’s take a look.

An MVP is a product with a minimized feature set, usually intended to demonstrate vision while reducing the developer’s risk. Precious resources are saved by creating a smaller set of capabilities or information. Focusing solely on providing some initial value to users allows developers to test their assumptions and demonstrate basic market viability before making significant investments of time and money.

In some circles, a prototype is a representative example of the desired end-product or service. These can be incredibly useful for demonstration, testing, and getting buy-in from stakeholders. In other circles, prototyping is used for quickly assessing the viability of an idea using lower-than-production-quality materials (e.g., using a paper towel roll as a lightsaber handle …). The latter interpretation of prototyping is perhaps more in line with the intended meaning of MVP, as coined by Frank Robinson and made popular by Steve Blank and Eric Ries.

One important thing to note is that both MVP’ing and Prototyping are best done iteratively. Don’t make just one MVP or prototype. Create several, each of which builds on the lessons from the previous ones.

Depending on the environment, it can be important to note the differences. Perhaps you find yourself in an organization where the term “prototype” has centered around the shiny representative final product. If you bring up rapid prototyping to weed out various risks, you may find yourself under the proverbial pile of rotten tomatoes. On the other hand, you may need to be careful using the term MVP. Some may have bad experiences where an MVP was too “Minimum” and not enough “Viable Product,” such that it turned off potential investors.

Can your egg carton be considered a prototype? Certainly! Just remember that there may be some “Doc Browns” out there, apologizing to Marty for the scrappiness of his model of downtown Hill Valley, complete with miniature DeLorean. You may have to explain that your use of “prototype” is not the same as a production ready representative example.

Follow these links if you’d like to learn more about MVPs and Prototypes.

by Aileen Laughlin | Sep 9, 2019 | Uncategorized

If you ask anyone on the ITK team (or really most people I work with), they’ll tell you I am always sharing articles. I recently came across this article about one person’s progression from sweeping floors to executive leadership, and instead of just emailing it to some friends I thought I’d write about it for the ITK blog.

The article appeared in Inc magazine, and it’s titled This Kombucha CEO Hired a Man Who Spoke No English. He Is Now a Company Executive. It’s a terrific story about solving problems and working together – two big themes of Team Toolkit’s work.

The article is a great example of how having the right attitude can take you far in life. What I really enjoyed, though, was imagining this gentleman’s humility, curiosity, and desire to learn and develop mastery as he progressed through different areas within the company. The CEO’s comment about generalists vs. experts made so much sense: despite not having a laundry list of degrees, this employee is always the first to solve a problem! Openness and diversity in thought and experience are such important elements of solving problems and practicing innovation.

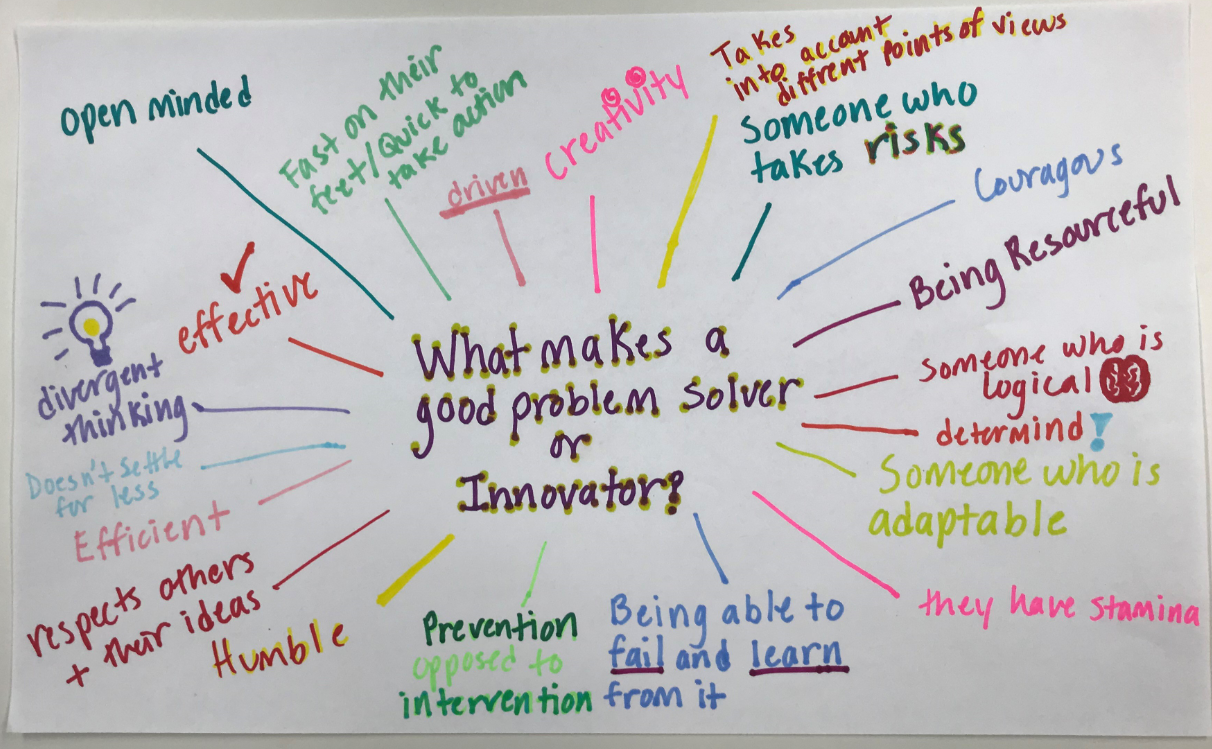

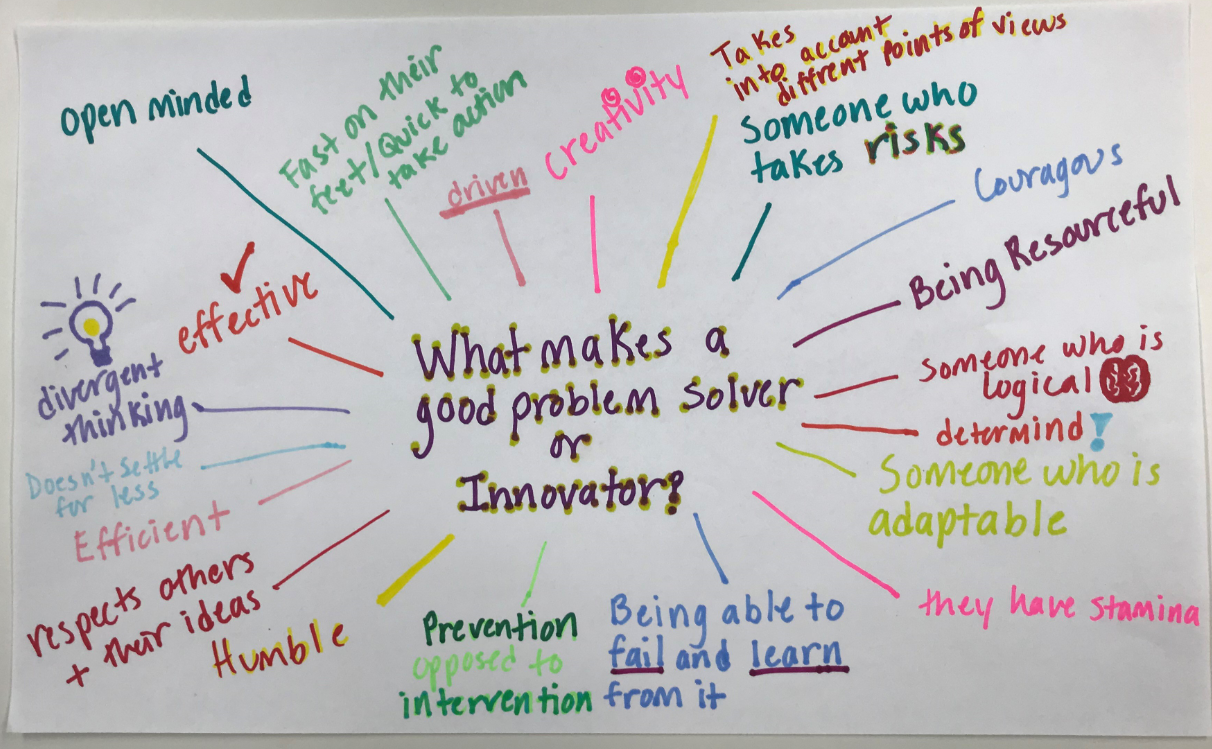

Team Toolkit thinks about this stuff a lot, and we’re always exploring ways to better understand it. At a recent event, we took a post-it poll asking, “What makes a good problem solver or innovator?” Our high-school intern, Niomi Martinez, turned the poll results into the Mindmap above.

We’d love to hear what you think – Anything missing from the list? Anything you agree with? Disagree with? Share your thoughts in the comments section below!

by dbward | Jul 22, 2019 | Uncategorized

Our Story From Last Week Continues…

Day Two: Brainstorming and Prioritizing Actions

At the end of the first day, the facilitation team met and affinitized the feedback we’d heard, grouping it into topics for the next day. Our intent was to form the participants into persistent teams of 5 or 6 people; these teams would move from topic to topic throughout the day.

We also noted which people had been helpful or disruptive in furthering group cohesion, and strategized how to ameliorate this. One of the VIPs, whose mere presence as a VIP was hampering conversation, solved our problem by recognizing that he should not be present for the second half.

The other critical tactic we used was to intentionally mix up the teams, so that each team had representation from two or more roles. Because folks had naturally sat with their ‘friends’, this was relatively easy to accomplish—we went around the room, table by table, and had everyone count off 1, 2, 3, etc. as their group assignment. This shuffled the groups nicely, resulting in a much more varied mix of people in each.
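For readers who like to see the mechanics, the count-off can be sketched in a few lines of Python. This is only an illustration, assuming each table is a list of names and that the number of teams is chosen up front; the function name `count_off` and the sample names are hypothetical and not part of the actual workshop.

```python
from collections import defaultdict


def count_off(tables, num_teams):
    """Walk the room table by table, counting off 1..num_teams.

    Because neighbors at a table receive consecutive numbers,
    people who sat together end up on different teams.
    """
    teams = defaultdict(list)
    counter = 0
    for table in tables:
        for person in table:
            team_number = counter % num_teams + 1
            teams[team_number].append(person)
            counter += 1
    return dict(teams)


# Hypothetical seating: each table is made up of people in one role.
tables = [
    ["dev_a", "dev_b", "dev_c"],
    ["user_a", "user_b", "user_c"],
    ["admin_a", "admin_b", "admin_c"],
]
teams = count_off(tables, num_teams=3)
```

In this toy seating, every resulting team has one developer, one user, and one admin, which is the kind of mix the count-off was meant to produce.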

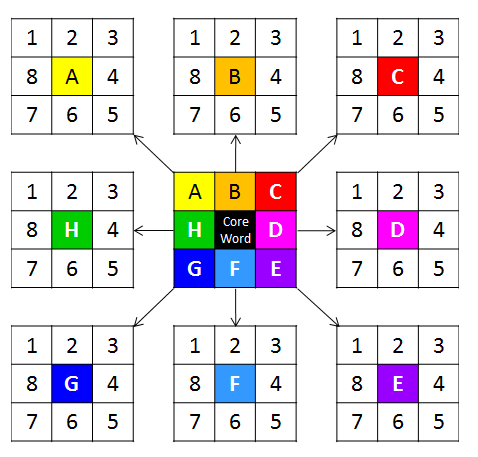

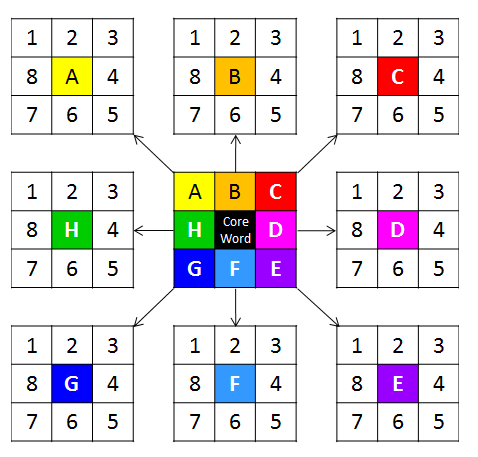

The Lotus Blossom encourages participants to brainstorm subtopics, and then dive into each subtopic to generate related ideas.

To gather ideas for possible actions, we used the Lotus Blossom method. The Lotus Blossom is a structured brainstorming tool that enabled the teams to ideate multiple solutions to the problems identified. Facilitators used “How Might We?” statements to help elicit ideas, shifting the focus from constraints to possibilities.

Using this method, we were able to generate around 40 potential solutions for each topic area in 50 minutes, successfully staying on-task without going too deep into any one topic or rat-hole. Because ideas are immediately written down on the Lotus Blossom worksheet, all participants can see what was brainstormed, and think laterally, rather than getting stuck on a particular topic.

Each table had a MITRE facilitator who helped fill out the diagram. This helped teams understand the context when coming to a new topic. Facilitators also kept the conversation moving, recording and reflecting ideas back to the group.

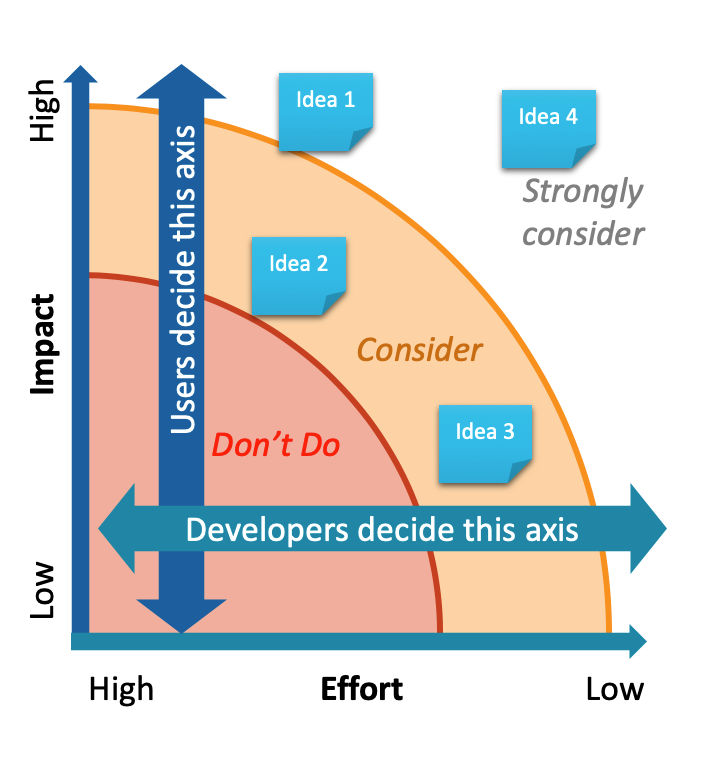

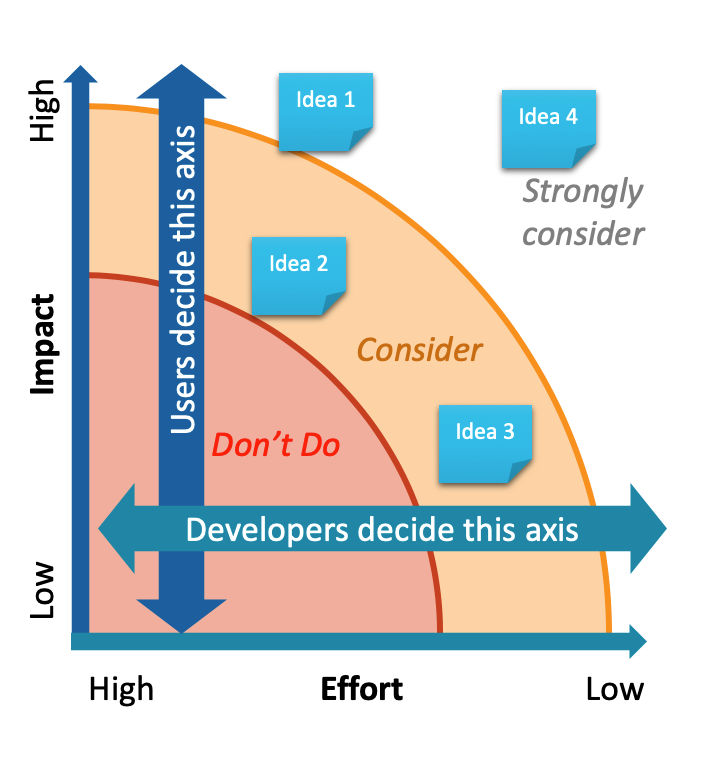

We also wanted the teams to prioritize their ideas. To do this, we used the Impact/Effort Matrix method, sometimes also called the “2×2 matrix” method. (See, e.g. https://www.groupmap.com/map-templates/impact-effort-matrix/ for more details).

The Value/Effort matrix is a technique from the Six Sigma toolbox that helps

The axes we chose reflected our conflicting populations: impact, which would be specified by end users, and effort, specified by the development teams. The resulting priority was a function of both inputs: high impact/low effort actions would obviously be prioritized above low impact/high effort.
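As a rough sketch of how the two inputs can combine into a priority, here is a small Python example. The 1 to 5 rating scale, the midpoint of 3, the "impact minus effort" ordering, and the sample ideas are all assumptions made for illustration; in the workshop itself, the teams placed ideas on the matrix by discussion rather than by formula.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    impact: int  # assumed scale: 1 (low) to 5 (high), rated by end users
    effort: int  # assumed scale: 1 (low) to 5 (high), rated by developers


def quadrant(action, midpoint=3):
    """Name the 2x2 quadrant an action falls into."""
    impact = "high" if action.impact >= midpoint else "low"
    effort = "high" if action.effort >= midpoint else "low"
    return f"{impact} impact / {effort} effort"


def prioritize(actions):
    """Order actions so high impact / low effort comes first.

    Sorting on (impact - effort) is one simple choice among many;
    ties are broken in favor of the higher-impact action.
    """
    return sorted(
        actions,
        key=lambda a: (a.impact - a.effort, a.impact),
        reverse=True,
    )


# Hypothetical ideas, not taken from the actual session.
actions = [
    Action("idea A", impact=5, effort=1),
    Action("idea B", impact=1, effort=5),
    Action("idea C", impact=4, effort=4),
    Action("idea D", impact=2, effort=1),
]
ranked = prioritize(actions)
for action in ranked:
    print(f"{action.name}: {quadrant(action)}")
```

With these sample ratings, the high impact / low effort idea lands at the top of the list and the low impact / high effort idea at the bottom, matching the ordering described above.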

Time Pressures = Progress

The last piece of our method was to use the clock. We had an abbreviated schedule on the second day: about 4 hours of work time available. We knew we wanted groups to rotate between topics, with later groups building on the work of earlier groups. But by the third or fourth rotations, groups would be coming to a large amount of context; in this situation, brainstorming often begins to dry up and get less productive.

We eventually settled on an accelerating schedule. Groups had 30 minutes to brainstorm and prioritize actions for their first topic; then, 20 minutes on their second, then 10. After each round, groups reported out to the entire room, to help promote coherence, but also to help the participants feel invested in these ideas.

When we noticed participants who were disengaged or seemed reticent, we asked (or volunteered) them to present ideas to the group at large. This was extremely helpful; in some cases, people who had been ‘floating along’ and letting the rest of the group do the work ended up pulling out an idea and making it their own, which vastly increases the likelihood of the idea being actioned in the future. For example, a sysadmin realized that, even though her office had solved lots of process problems, other offices might not have, and that she could share her experience with other offices for an overall improvement.

After three rotations, all topics had a sufficient number of ideas brainstormed and prioritized, and we stopped and reflected back our key findings to the group as a whole; we then let the group break up into social groups, to continue to build common ground.

Results

The data, captured both as notes and as pictures, became the input for a response document delivered to the sponsor, which was met with strong approval. We were able to reflect the pains and opportunities in the current system and provide potential actions, all using the words and ideas of the sponsor’s own people.

An equally important outcome was the increase in team cohesiveness across locations and constituencies; folks left with an air of “we can do this together”, which was a desired but not guaranteed outcome. Breaking down silos of knowledge, and teaching developers and users how to communicate, are key means of improving the overall user experience of a system.

The work with the sponsor is currently on hiatus, but we have already been asked to run a similar session with additional sponsor representatives in the future.

Big thanks to guest bloggers Alex Feinman, Tom Seibert and Tammy Freeman for sharing this story!