Today’s post is the first in a series by guest bloggers Alex Feinman (with Tom Seibert and Tammy Freeman).

Sometimes, you have to mix and match methods to get the results you need. This story is an example of how important it is to be able to modify and adapt the plan in response to new facts and constraints.

MITRE was called in to facilitate a conversation about replacing or upgrading a piece of COTS software that a sponsor was using. We put together a session that would begin with knowledge elicitation to understand their domain, then focus on establishing user requirements, and finally make some progress toward conclusions and agreements on actions.

The sponsor sent close to 30 people to the two-day event. MITRE brought in a team of facilitators to help manage the large number of people.

While our team had some experience with the software in question, we had more experience with other, better software. We therefore expected to elicit the sponsor’s needs as a step toward potentially guiding them to a better option.

This plan, as they say, did not survive contact with reality.

The Map is Not the Terrain

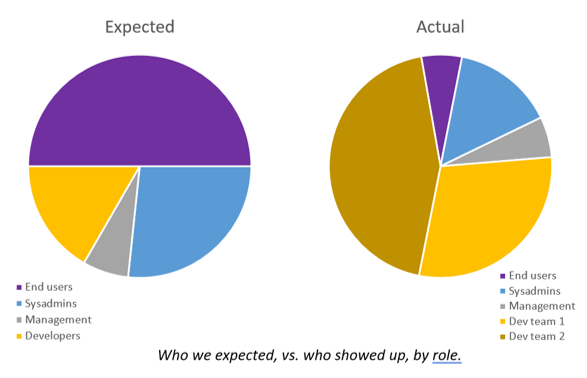

We were expecting our participants to be a mix of end users, ops/sysadmin folks, and program administrators. Instead, on arrival, we discovered that the bulk of attendees were developers, some employed by the sponsor and some contractors.

The two sets of developers were working on competing systems, both used by the sponsor. End users had to switch between these systems to get their work done. In addition, we had only a few actual end users, plus a number of sysadmins who sat between the developers and the end users.

The group was on the edge of fracturing, and finger-pointing was becoming more apparent: the makers of each system were defending their turf and jobs, and were beginning to think about bad-mouthing the other “team.” It was time to help these developers understand how things looked from the user’s perspective, and fast.

Day One: Finding Common Ground

After a quick consultation, the facilitators decided to keep gathering data on the work context, to get a sense of what was on everyone’s mind about the systems. Most users’ concerns centered on ease-of-use issues, responsiveness (or lack thereof) from the development teams, and the annoyance of switching between two different systems with similar purposes. Sysadmins’ and developers’ concerns centered on deployment and provisioning, and on licensing of third-party data sources.

We used dot voting to identify which concerns were foremost. This backfired slightly: nearly everyone voted for “merge the two systems,” a solution masquerading as a pain point. Some of the concerns were also near-duplicates that needed to be merged. In retrospect, we could have used a round of affinitization or Stormdraining to reduce the number of issues prior to voting.

Based on this finding, we decided to dig into why the systems were currently separate, and whether that separation had any benefits. A subgroup worked to put together a rough system diagram on a whiteboard wall. This revealed a huge amount of complexity hiding behind the user interface and helped legitimize the current split. One of the systems had strong processing capabilities, while the other had a much stronger UI. With this brought to light, we worked to help the group see that there might be a double win combining pieces of both systems.

Armed with this data, we gave the group six areas to focus on, ranging from user workflows and UI issues to system administration, data ingestion, and data processing. This allowed the group to break up naturally by role, which was both helpful and harmful: helpful in that each subgroup made progress, but harmful in that it did nothing to build coherence across the whole group. However, it was a necessary step toward group formation, because it let participants see that we intended to listen to them, understand their situation, and help them prioritize their concerns.

Informal discussions, such as those happening over lunch, were critical to getting folks to buy into MITRE’s role as an unbiased facilitator. These conversations helped cement their trust that we weren’t there to put half of them out of work, weren’t there to promote one system over another, and certainly weren’t there to sell them on software we created.

We also invested time with particularly reticent folks to bring them around to seeing other people’s perspectives, and to give them hope for open lines of communication. Although they are often overlooked when applying ITK “methods,” these informal conversations can make or break a long event such as this one!

By day’s end, we had a commitment from everyone to keep working together and keep talking—a great start given where we’d begun.

Stay tuned for the rest of the story in next week’s episode!